Artificial Nonsense

These are fun times to be in the world of high tech. We've been around for some of the more dramatic landscape shifts, from the breakup of the Bell System to the explosion of personal computers, the development and commercialization of the Internet, and the fun and peril that social media has brought us. (In all of these, incidentally, hackers were considered to be the biggest threat.)

Now it appears we're seeing the ground shake yet again with the exponential growth in the use and popularity of artificial intelligence and chatbots. And as with every technological development that has come along in the past half century, there are those who live in fear and dread of what's about to happen and those who look forward to the fun and chaos. Count us amongst the latter.

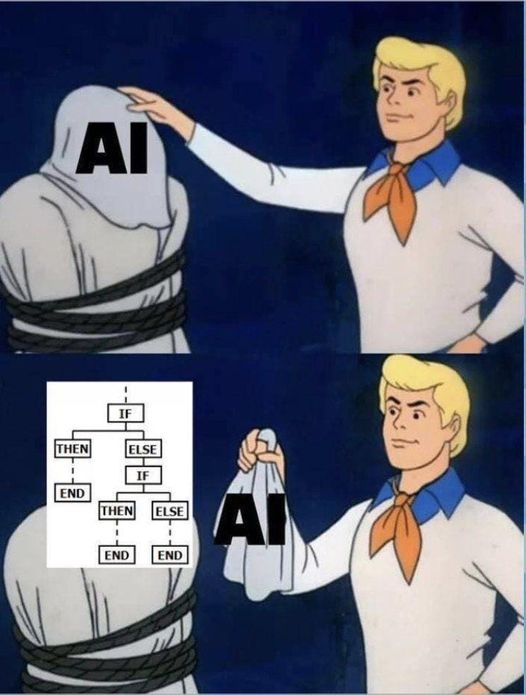

First, some words of advice. Please don't think of artificial intelligence as anything more than a potentially useful tool. It's not actually intelligent; it basically produces formulaic responses to specific requests by drawing on a massive dataset of words, facts, and narratives. You can program this tool to say things that will make it appear human, but it is no more human than ELIZA was decades ago on mainframes. In fact, just as you could program a calculator to give wrong answers (something we'd really enjoy having around the office), so too could a chatbot exist that's designed to be completely unhelpful and even destructive. Such a thing could be achieved with either very good or very bad programming.

We've already seen a number of instances of the latter. The National Eating Disorders Association (NEDA) brought in a chatbot named Tessa to replace its hotline workers a mere four days after those workers had unionized. There's a lot we could say about NEDA's motivations here, but let's move forward a bit to see what wound up happening. Just a week after this decision was made, Tessa had to be taken offline after it was found to be recommending unhealthy eating habits and actually encouraging eating disorders in the very people who needed exactly the opposite advice. One user was quoted in Motherboard as saying, "Every single thing Tessa suggested were things that led to the development of my eating disorder. This robot causes harm."

We even saw a rather humorous example of this directed at 2600, in which Google Bard claimed that a documentary film titled 2600: The Hacker Quarterly had been made in 2012 to rave reviews ("A fascinating and thought-provoking look into the world of hackers," according to The New York Times, along with similar praise from other publications). The chatbot also provided a very specific list of theaters it had played in, from Hollywood to Hong Kong, and even informed us that the DVD/Blu-ray release was on October 16, 2012. The more questions we asked, the more detail we received, such as: "The film has been praised by critics for its balanced and informative approach to the subject of hacking. It has also been praised for its interviews with some of the most influential figures in the hacker community."

Of course, not one word of this was true. No such film has ever been made. But it sounded quite believable. Bard even went so far as to credit specific real people with this release. One would not be wrong to call this pathological lying, if it had been done by a human. But, of course, it wasn't. This kind of behavior can only be attributed to the design and training of the chatbot in question.

We found this to be funny because we knew not to take it seriously. This is a technology in its infancy and it's going to screw things up. A lot. And it's up to us to push it to the limits and figure out ways to break it. That's what hackers do, after all.

We are most certainly not at the dawn of a robot uprising or the singularity, despite the panic you may be hearing from people, many of whom really should know better. How much power we give to AI bots is entirely up to us. Every instance of something going wrong with artificial intelligence can be traced directly back to a human messing up and believing that automation was an acceptable substitute for human interaction and decision making.

None of this is meant to imply that artificial intelligence can't pose a very real threat to our daily lives. But that will only come about if we or the people we entrust make very bad decisions. An autonomous car, for instance, may indeed have a better safety record than a vehicle driven by a human. But if we stop encouraging humans to learn how to drive, they will become wholly dependent on automation in order to go anywhere, which will become a huge problem if something goes wrong with that system, as it inevitably will.

A tool is only great if you truly know how to use it. If you can't operate without it, you have literally become an extension of the tool, rather than the other way around. And that means you might never know when it's giving you bad results, and you certainly won't know why.

In May, approximately 4,000 jobs in the United States were lost to artificial intelligence. This represents around five percent of the total number of jobs lost that month. Earlier in the year, Goldman Sachs predicted that artificial intelligence could expose the equivalent of 300 million full-time jobs worldwide to automation. At press time, there was an ongoing strike by the Writers Guild of America in which one of the major issues was the increasing use of AI to produce written content.

This is a true concern if replacement by AI is the end of the story. And all of this clearly shows one thing: humans are the problem. We don't mean that in the sense that they do inferior work and need to be replaced by something better. The problem lies in those humans who believe in AI so much that they're willing to have their fellow humans replaced by code and routines that clearly are not up to the task.

When a company replaces its staff with artificial intelligence, it is basically saying that it no longer has to actually care. What other message can be inferred from those who no longer want to actually talk to their customers? It might be possible to fool many into thinking they're having a real conversation, but the reality is that they're not, and the many benefits of actual interaction will never be realized. Subtleties in the back-and-forth will be missed, suggested improvements and corrections from the customer will be ignored, and those priceless human connections that we can never predict simply won't be made. That is the world where artificial intelligence is seen as a replacement.

What does it say about us if we allow secret algorithms to determine who we are and what we like? This has already been happening everywhere from Facebook to Netflix, and it's considered normal and even convenient. That's on us for accepting someone else's interpretation of our very beings and not demanding that these systems work the way we as individuals want them to. But now the very real possibility exists that such algorithms will be used by film and television studios to create new works based on what we have already accepted. They're counting on us not to know the difference, because it sure would save them a ton of money if we didn't. That's why we have to work harder to act more human and embrace different and unique material, not just more of the same with slight variations. There's a real parallel here to what makes a healthy society.

So, yes, there is a threat here and not an insignificant one. But it's a threat that we are making to ourselves if we act lazy and allow the technology to be abused. The world where artificial intelligence is viewed as an enhancement to the work that humans are doing is one where we all can benefit. Jobs that don't require any actual thought are certainly better off being done by non-sentient automation. But the benefits realized must be passed on to those who are displaced, either through new and better jobs or adequate compensation from the savings being achieved. Generative AI is actually predicted to create a great deal of new employment, so there is really no excuse for anyone to be hurt by these advancements. Other than greed.

We know this is going to be challenging. But we also know that humans have a uniqueness to them that, while it can be imitated, will never be completely replicated. It might be a bit difficult to tell the difference on the surface, but that won't be the case when we spend a little more time listening and analyzing.

In other words, we need to simply pay more attention to each other. Then we'll truly know who we're talking to.