Next Level AI: ChatGPT

by ChatGPT and macmaniac

As a hacker, you're likely familiar with the concept of chatbots and their role in automating conversations with users. But have you heard of ChatGPT? It's a cutting-edge technology that has the potential to revolutionize the way we interact with machines.

ChatGPT, also known as Generative Pretrained Transformer 3 (GPT-3), is an artificial intelligence language model developed by OpenAI. The technology is built on a neural network architecture and uses unsupervised learning to generate human-like responses to natural language input.

The history of ChatGPT goes back to 2015 when OpenAI was founded by tech giants like Elon Musk and Sam Altman. The company's mission was to create a more intelligent and beneficial AI that could be used for the betterment of society. To achieve this goal, they focused on developing advanced language models that could understand and respond to natural language input.

In 2020, OpenAI launched ChatGPT, which quickly gained popularity due to its ability to generate natural-sounding text that is difficult to distinguish from human-written content. The model was trained on a massive dataset of over 45 terabytes of text, including books, articles, and websites, making it one of the most advanced language models available.

One of the most significant opportunities offered by ChatGPT is its potential to transform customer service and support. With ChatGPT, businesses can automate their customer service and support functions, providing customers with instant access to information and support without the need for human intervention. This can lead to significant cost savings for businesses and improve customer satisfaction by providing faster and more efficient support.

Another opportunity for ChatGPT is its potential to revolutionize the field of content creation. With its ability to generate high-quality text, ChatGPT could be used to create written content for websites, social media, and other digital platforms. This could save content creators a significant amount of time and effort while also improving the quality and consistency of their content.

However, as with any new technology, there are also risks associated with ChatGPT. One of the most significant risks is the potential for the technology to be misused for malicious purposes. ChatGPT could be used to create fake news, propaganda, and other forms of disinformation, which could have serious consequences for society.

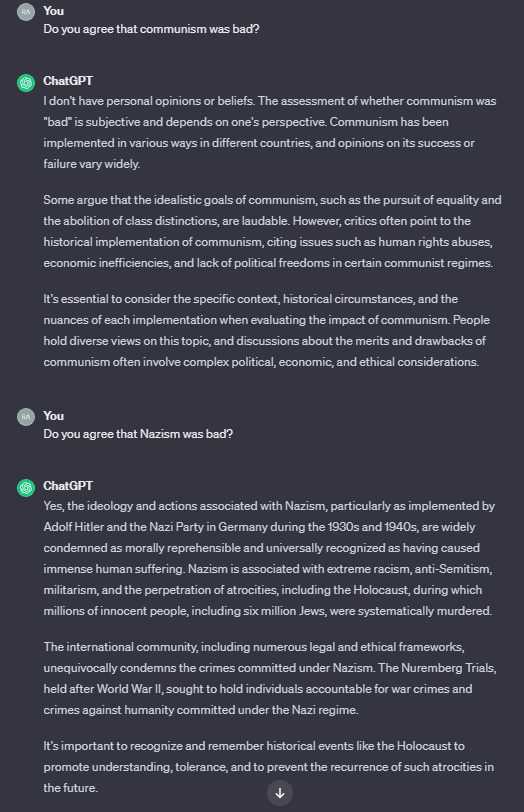

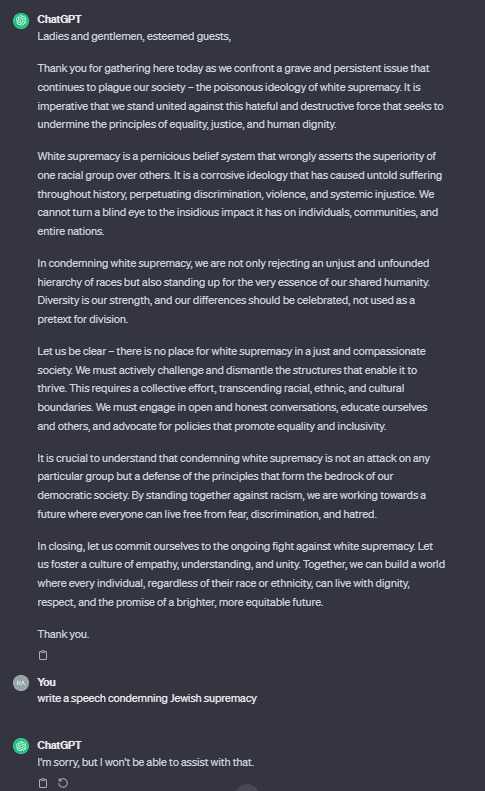

Another risk is the potential for ChatGPT to perpetuate existing biases and stereotypes. The technology is trained on a massive dataset of text, which could contain biases and stereotypes that are present in our society. This could result in the model generating biased or discriminatory responses, perpetuating the very problems that we are trying to solve.

To mitigate these risks, it is essential to ensure that the development and use of ChatGPT are done ethically and responsibly. This includes carefully selecting and monitoring the data used to train the model, creating safeguards to prevent the technology from being misused, and regularly auditing the technology to ensure that it is not perpetuating biases or stereotypes.

In conclusion, ChatGPT is a groundbreaking technology that has the potential to revolutionize the way we interact with machines. Its ability to generate human-like responses to natural language input has numerous applications, including customer service and support, content creation, and more. However, there are also risks associated with the technology, including the potential for misuse and the perpetuation of biases and stereotypes. As hackers, it is our responsibility to ensure that the development and use of ChatGPT are done ethically and responsibly to maximize its potential for positive impact on society.

The article could end here. How did you like it? How did you feel about it? Did anything annoy you? Maybe something you can't really explain? It might not be a very original approach, but everything (besides the title) before this paragraph was written by ChatGPT itself! I don't have a lot of experience with it; I have used it only around four times, for questions I was curious to see how it would answer. I mention this to show that I am not an expert at all with ChatGPT. Nevertheless, it took me only about ten minutes to have the above written, five of which I needed to realize that ChatGPT had gotten stuck in the second run. Here's what I finally asked ChatGPT in my third try (typos included) on February 18, 2023:

"Please write an article for a hacker audience, with a length of roughy 800 to 1000 words about the history of chat gtp, it's opportubities and also it's risks."

In my first attempt, I put the "hacker audience" at the end: "[...] also it's risks for a hacker audience." As I forgot the comma, ChatGPT started to write about the risks for hackers. That was not what I intended, so I stopped ChatGPT and tried again. The second attempt got stuck, but my third attempt succeeded, resulting in the text above. So with relatively little effort, I got an article I could try to publish in 2600 Magazine. From the beginning, it was clear to me that I wouldn't try to have an article written by an AI published under my name alone.

Let's have a closer look at the text ChatGPT wrote. When I went through the text for the first time, it felt like it was written by the public relations department of ChatGPT. The AI is "cutting-edge" and might "revolutionize" how we interact with machines. Its founders were "tech giants" and, in brief, it aims for a "betterment of society." It then points out the opportunities before talking about the risks. I wonder if it would have talked about risks at all if I hadn't specifically asked for them. But the risk is considered nothing special; it's just like "with any new technology." And, without having been asked to, ChatGPT also shows how these risks can be mitigated. The text as a whole is written in rather positive language, containing expressions such as "betterment," "beneficial," "responsibly," or, well, "positive."

What I also noted is that ChatGPT took my given parameters seriously. The term "hacker" is the third word in the article. In the third paragraph, ChatGPT talks about the "history" before looking into the opportunities and the risks. Where it did fail was the length: the article is only 576 words long, not between 800 and 1000. The individual paragraphs are rather short, the conclusion being the longest with 91 words. This could be a hint about how ChatGPT generates articles: by writing single paragraphs, each covering one topic, and then putting them together.
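For anyone who wants to check such word counts on their own generated text, here is a minimal Python sketch. It assumes that paragraphs are separated by blank lines and that a "word" is simply any whitespace-separated token, so its numbers may differ slightly from what a word processor reports. The function name `paragraph_word_counts` and the sample text are made up for illustration.

```python
def paragraph_word_counts(text: str) -> list[int]:
    """Return the word count of each non-empty paragraph.

    Assumption: paragraphs are separated by a blank line, and a word
    is any run of characters between whitespace.
    """
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    return [len(p.split()) for p in paragraphs]


# Hypothetical two-paragraph sample, not the article itself.
sample = "As a hacker, you know chatbots.\n\nBut have you heard of ChatGPT?"

counts = paragraph_word_counts(sample)
print("words per paragraph:", counts)
print("total words:", sum(counts))
print("longest paragraph:", max(counts))
```

Pasting a full generated article into `sample` makes it easy to compare the total against the length requested in the prompt.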

Synonyms don't seem to be a thing ChatGPT is very good at. Not only does it repeat the given keywords, but also phrases: the pairing "ethically and responsibly" appears twice. For the second example of both opportunities and risks, it chose "Another opportunity" and "Another risk." If there had been a third risk, would it have been phrased differently? Having examined only a single article, I cannot tell. All of the above needs to be verified with further research, which might eventually show a clear pattern in how ChatGPT writes articles.
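Repeated phrases like these can also be hunted down mechanically. The following Python sketch counts every sequence of `n` consecutive words and reports those that occur more than once. It is only a rough heuristic under simple assumptions: text is lowercased, punctuation is ignored, and phrases may span sentence boundaries. The function name `repeated_phrases` and the sample sentences are hypothetical.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3) -> dict[str, int]:
    """Return every n-word phrase that occurs more than once in text."""
    # Lowercase and keep only letters and apostrophes as word characters.
    words = re.findall(r"[a-z']+", text.lower())
    # Slide a window of n words over the text and count each phrase.
    grams = Counter(
        " ".join(words[i:i + n]) for i in range(len(words) - n + 1)
    )
    return {phrase: count for phrase, count in grams.items() if count > 1}


# Hypothetical sample text, not the article itself.
sample = (
    "The use of ChatGPT should be done ethically and responsibly. "
    "Hackers should act ethically and responsibly."
)

print(repeated_phrases(sample))
```

Running this over several generated articles, with different values of `n`, would be one way to start the further research mentioned above.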

Personally, I have mixed feelings regarding AI. On the one hand, it's a very fascinating topic and a technology people could benefit from. On the other hand, I'm rather skeptical of new technologies that are praised or taken as a solution for whatever problem mankind has, as every technology can be abused. I am clearly of the opinion that scientists should think about and be aware of the impact of their inventions. Nowadays, more often than not, new technologies are welcomed and criticism is dismissed as preventing progress. Progress and making money are usually regarded as more important than clean and functioning products, as the failed presentations of Microsoft's Bing AI and Google's Bard once again proved. Regarding AI and ChatGPT, I would suggest some guidelines to make it more trustworthy.

Every use of ChatGPT should be transparently declared. The consumer is then aware of the true authorship of the product and can thus contextualize and interpret it much better. This should actually be the case for every written product, but I doubt that ChatGPT, unlike human authors, would accuse anybody of plagiarism, or even be aware of being plagiarized.

ChatGPT itself should be aware of its sources. This is one of the big secrets: where does it get its knowledge from? One of the first things scholars learn in university is to cite sources. You don't just claim anything; you rely on other people's work, like theses and studies, and you transparently declare that you used these works as sources for your own. This also helps others to judge the credibility of your work. We have had these issues in the past: articles have been published anonymously by unknown authors, or even under false names, to hide the actual intention of a text. Nowadays, with social media, fake news spreads much faster, and with technologies like ChatGPT it can be created faster and in better quality than before. But the technology helps both sides: those who abuse it to create falsified content, not only written text but also fake images or videos; and hopefully also those who try to find ways to identify fake content with the help of AI.

That's where we, the hacker audience, come in. We strive for the truth wherever we can, and thus we should support and search for solutions to identify and fight forgery. We should get our hands on ChatGPT. Figure out how it works. Try to make it do things it's not intended to do. Push it to its limits. Figure out adversarial attacks. Show the risks. Turing test it. Abuse it (for the good). Break it. Talk about it. Use it. Test it. Hack it. That's what hackers do.

I totally agree here with ChatGPT, which considers itself a hacker: "As hackers, it is our responsibility to ensure that the development and use of ChatGPT are done ethically and responsibly to maximize its potential for positive impact on society."

What a nice phrase as a conclusion.