YouTube Is Not a Safe Space

by Men Without Hats

The last few years have been extremely stressful.

Given all the scary things going on in the world, sometimes we need levity and a good laugh.

To this end, there are a number of themed channels on YouTube, one of which produces family-friendly pranks. Recently, no videos from that prank channel were showing up in the general feed. Upon deeper inspection, it turned out that the YouTuber running the channel had had his most recent video flagged by YouTube for not being "safe." The algorithm had determined his video contained both sex and nudity, although it clearly had neither. He appealed, hoping for some common sense, and within 15 minutes the appeal was denied. He expressed a lot of frustration at this, including doubt that a live human had actually reviewed his appeal. He then declared that, after 15 years on the platform, he was jumping ship to another video platform that was algorithm-free.

While the impact of algorithms is not limited to this YouTuber, and seems to be non-partisan and non-opinionated, it is the latest in a decades-long series of attempts to "solve" complex problems with simplistic solutions that look good politically but have all sorts of far-reaching negative consequences. In this article, we'll take a look at this history, then at the actual problem YouTube is trying to solve, and see if we can come up with any alternative solutions.

In the 1980s, according to right-wing media and talk radio, Satan worship among teens was on the rise. There were allegations of hidden messages in rock music, including rumors that if you played the B-side of a record backwards, you would hear a personal message from the Devil. In response to this "Satanic Panic," Tipper Gore (wife of former Vice President Al Gore) spearheaded an initiative called the Parents Music Resource Center (PMRC) in 1985. The idea was to place labels on rock music to warn parents that it included lyrics that were explicit or worse, so that parents would be able to protect their children from Satan worship.

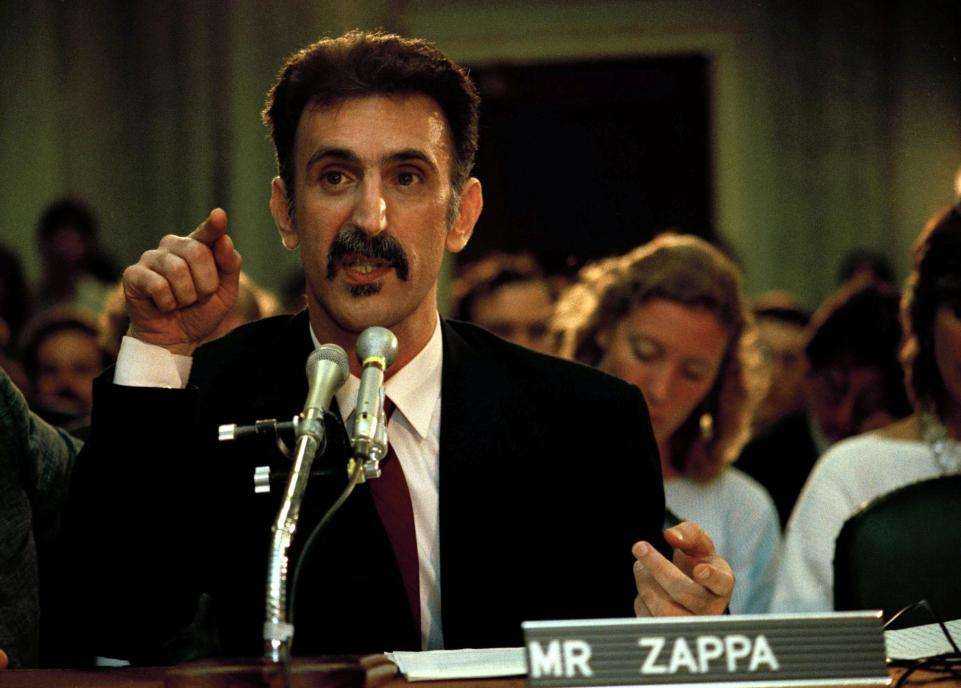

Naturally, musicians revolted, angered that government-imposed labels on their lyrics (their free speech) were preventing sales and creating unfair judgments on their words. This led to a congressional hearing in which musicians such as Frank Zappa brilliantly stated their cases for free speech and made suggestions on how to solve the issues at hand without compromising their art. While the legacy of that hearing left us with the now-familiar labels on music CDs noting explicit lyrics, this is by no means a settled matter, and the arguments at play then are completely applicable today. Ironically, the hearing is also available on YouTube.

The ugly head of "save our children" reared itself again in the 1990s with the rise of underground raves. At the dawn of rave culture, we saw free expression in the form of music, art, and in many cases, free love. Soon, another kind of freedom arrived: drugs. While the vast majority of people were responsible with their use (or non-use) of drugs, a small minority suffered high-profile overdoses and even deaths. This terrified parents, who did not want their children doing drugs, and the parents demanded accountability from their politicians. As a result, strict laws began going into place to punish not only the organizers of raves where drugs were used, but also the venue owners, who often did not know the nature of the events. One of the final results was the RAVE Act, introduced in 2002 and enacted in 2003 as the Illicit Drug Anti-Proliferation Act, which remains a prime example of government overreach that is destructive in the name of safety and causes many more problems than it solves.

It's also important to recognize that during this time, the rave community developed a number of self-policing solutions, such as ensuring event organizers had basic CPR training. There were even community-run organizations like DanceSafe that did pill testing at raves to make sure the drugs did not contain poison. A key lesson emerged during this time: many people wanted to use these solutions and claim that they were practicing "safe" drug use. However, others recognized that there is no such thing as safe drug use, and coined the phrase "drug harm reduction." This small but significant clarity of language recognizes an important truth from which YouTube could surely benefit.

Finally, we need to state that those who forget the lessons of the past are doomed to repeat them.

After 9/11, many extremely absurd and unconstitutional laws were passed in the U.S. to "keep people safe," including the creation of the Department of Homeland Security, which included the TSA. If the end goal of the TSA is to ensure that airports and air travel remain a "safe space," it is one of the greatest failures of domestic policy in American history.

In addition to the endless absurd rules, such as checking ID (challenged in court by John Gilmore), taking off shoes, or not allowing "liquids" to pass through security checkpoints, the only thing the TSA has been successful with is ensuring that air travel is an absolutely miserable experience that brings lots of profit to corporations funding ineffective security machines at taxpayers' expense.

If we attempt to make sense of the endless spider web of contradictory and ever-changing regulations, we are looked upon with suspicion by contracted goons who LARP as law enforcement and somehow have the power to deny our ability to fly on a whim.

Another fine example of extreme over-correction in the name of "safety."

We can see from this historical account that what YouTube is doing is not new, but simply the latest in a long line of hammers trying to smash anything that looks remotely like a nail. But in all of this, it's important to ask what a "safe space" is. Perhaps if we clarify this, we can understand where YouTube is coming from.

For our purposes, we'll define a "safe space" as a controlled environment where someone is able to explore difficult or challenging topics without judgment, usually with the assistance of a trained/licensed professional. For example, if we have a fear of frogs, a therapist might play sounds of frogs croaking, show us pictures of frogs, and slowly get to the point of actually seeing and touching a real frog. The idea here is to slowly introduce things that gradually push us out of our comfort zone and make us stronger.

However, this is the opposite of what YouTube is doing.

YouTube seems to want to play protector in the name of safety. To really expose this, we need to reframe how "safety" is being used, and show exactly who is being "protected."

When George W. Bush was president, he created "free speech zones" to allow people to peacefully protest his illegal invasions of Iraq and Afghanistan. Often these "zones" would be a considerable distance from his entourage, and anyone attempting to voice their free speech outside of these zones would be arrested. In another example, and one that is making an unfortunate comeback, banning books to "protect children" usually helps parents who don't want to deal with concepts they find uncomfortable. This is marginally better than, but not so different from, governments banning books to prevent people from considering certain ideas that might upstage political power. In these examples, both the free speech zones and banning books create a "safe space," but one that is only helpful to a select few.

But maybe we should ask what YouTube is trying to achieve here.

After all, why are sex and nudity a problem? Indeed, "safe space" seems to have multiple interpretations. To a Big Tech company like YouTube, it seems to mean a puritan and sterile environment in which to sell ads. But to many people, the exact same phrase means a place to show nudity, whether it be classical artwork, porn, or anything else. And if YouTube is actually trying to use this "safe space" concept to make money from advertisers, have they forgotten the historical mantra that sex sells? Or maybe they are afraid of getting sued by the same people who seek to impose book bans.

We should also take a moment to reflect on what "safety" is.

The truth is, just because we feel safe does not mean we are safe. Take computer anti-virus companies: they ask us to pay a monthly or annual fee to run virus scans periodically. What they do not mention is that the only "safe" computer is one that is turned off and unplugged. Anti-virus software can certainly help reduce our chances of getting a computer virus, but the reality is that these companies are selling the feeling of being safe from viruses, much like an insurance company.

YouTube seems to be trying to do this too. In using their algorithms to flag and remove videos in a broad stroke, they sweep up many unrelated videos and, through sheer volume, also catch a few that are actual offenders. This is a bit like a fisherman who has a huge net that catches 100 old boots and a single fish. Technically, he was successful at fishing, but the reality is that he probably needed a better net.

How can we solve this?

It's a hard problem, not least because YouTube is completely non-transparent.

Right now, based on experiences like the opening anecdote, we really don't have much reason to trust anything they say. While demanding the algorithm be made public is a bit much, they could produce a weekly or monthly report showing how many videos the algorithm took down, and what percentage were appealed. If we had a history of reports like this, and we could see trends, such as a reduction in appeals, it would help build confidence in their system.
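To make the idea concrete, here is a minimal sketch of what such a report could boil down to. Everything in it is an assumption for illustration: the `PeriodReport` fields, the `appeal_rate_trend` helper, and the numbers are all made up, and nothing here reflects data or tooling that YouTube actually publishes.

```python
from dataclasses import dataclass


@dataclass
class PeriodReport:
    """One hypothetical transparency report (all fields are illustrative)."""
    period: str    # label for the reporting period, e.g. "month 1"
    removed: int   # videos the algorithm took down in that period
    appealed: int  # how many of those removals were appealed

    @property
    def appeal_rate(self) -> float:
        # Share of removals that were appealed; 0.0 if nothing was removed.
        return self.appealed / self.removed if self.removed else 0.0


def appeal_rate_trend(reports):
    """Change in appeal rate between each pair of consecutive reports."""
    rates = [r.appeal_rate for r in reports]
    return [later - earlier for earlier, later in zip(rates, rates[1:])]


# Made-up numbers, purely to show the shape of the report.
reports = [
    PeriodReport("month 1", removed=1000, appealed=200),
    PeriodReport("month 2", removed=1000, appealed=150),
    PeriodReport("month 3", removed=800, appealed=80),
]

for r in reports:
    print(f"{r.period}: {r.removed} removed, {r.appeal_rate:.1%} appealed")

# A run of negative changes means fewer and fewer removals are being contested.
improving = all(change < 0 for change in appeal_rate_trend(reports))
print("appeal rate falling every period:", improving)
```

A falling appeal rate alone would not prove the algorithm is getting better (creators might simply give up on appealing), which is why it would need to be read alongside public anecdotes.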

If we were able to put this data alongside public anecdotes, and we found similar trends, that could be enough to create sufficient confidence, and YouTube would not have to worry about pretending to be a "safe" space.