Data Analysis as the Next Step

by Tim R

In 2600, you see a lot of pieces about reverse-engineering, systems knowledge, phone phreaking, and other interesting things a curious person can learn about.

In this piece, however, I'm going to advocate for a broadening of horizons, and suggest that data science and data manipulation get added to this list of topics. Take a moment to think through all of the data leaks that have forced important changes in culture and brought about world-changing events: the "Collateral Murder" video, the diplomatic cable leak, details on PRISM, the Panama Papers, the Paradise Papers, and the list goes on and on.

While it's great that these pieces of information make it into the hands of journalists and academics who take the time to research and find the proverbial needle in the haystack, have you ever attempted the same? Perhaps you've taken the time to actually download these troves of data, but have you taken the time to sift through them yourself to see what speaks to your community or what poses danger to what you find important? I think the time is right to suggest that examination of data at scale is the next step you should be taking in your pursuit of the hacker ethos.

An anecdote might help motivate the discussion. In January of 2022, a convoy of disgruntled citizens established an occupation of downtown Ottawa as a way to protest what they believed to be unfair restrictions on freedoms through the implementation of vaccine mandates and enhanced procedures at border crossings. This occupation of Ottawa was a real mess, both figuratively and literally. Daily life came to a standstill and the government, both federal and provincial, as well as police, seemed reluctant to do anything about the situation. Taking a step back for a moment, the lead-up to the convoy's appearance in the capital city of Canada clearly required a build-up of people, support, and enthusiasm. It should surprise no one that creating a moment like this takes commitment of all sorts and, undoubtedly, money.

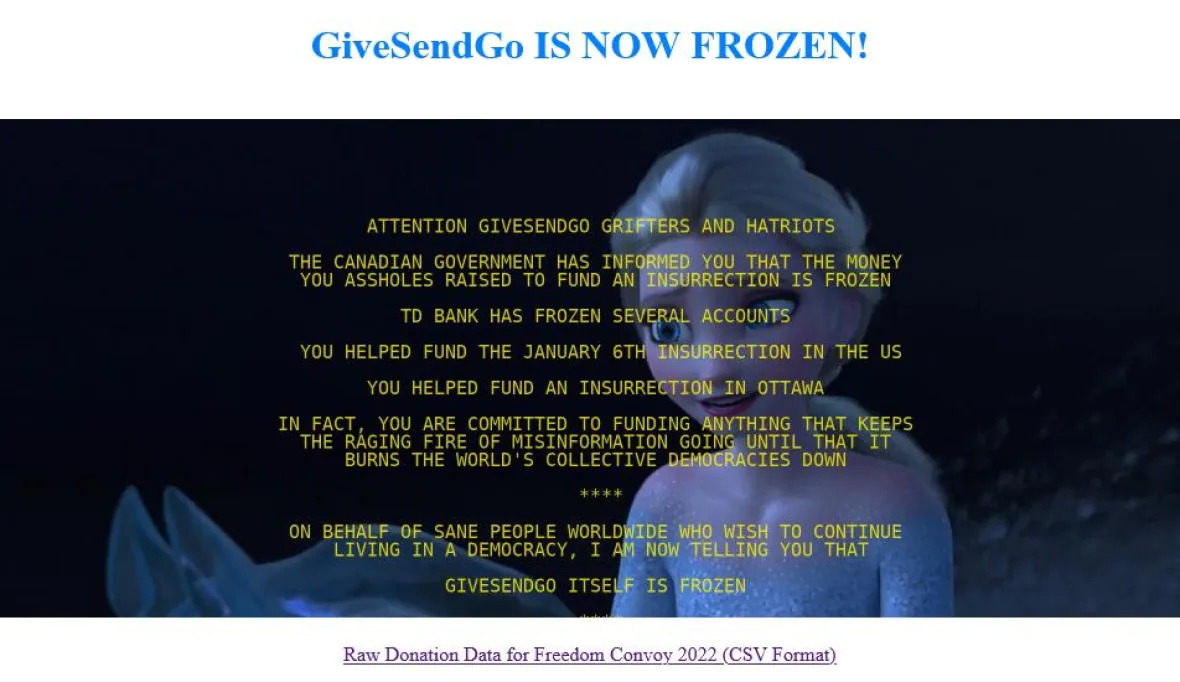

As is common today, the organizers of this movement took to crowdfunding to get the word out and to solicit donations. As the motivations of this group were a bit dubious, or at least not clearly stated, the initial crowdfunding campaign on GoFundMe was frozen. Looking for a viable alternative, the organizers moved to a lesser-known, and probably poor, choice: GiveSendGo. If you've been paying attention to the news, GiveSendGo has a known history of poor security and a lax approach to site updates. Quite suddenly and with great surprise, the site redirected to an alternate domain, GiveSendGone.wtf. Instead of a page outlining ways to give and totals to date, visitors saw a page stating that "GiveSendGo itself is frozen," along with a link to a 40 megabyte spreadsheet (convoy_donations.zip) of leaked donor information: name, email address, donation amounts, IP addresses, and anything else you could think of that would identify and provide insights about who contributed.

Here is where curiosity got the better of me. This development happened fast and, by the next morning when I finally realized something was up, I attempted to find the dataset. However, the hacked website was gone, as was the spreadsheet. Still wanting to see what was in that spreadsheet, I tried to retrieve it with a generic web search and only found a series of results analyzing portions of the data, all of which were very insightful and clever. [1]

In the days that followed, a veritable flood of analysis pieces was published. While all these works were great demonstrations of data journalism, I still felt compelled to continue digging to get exactly what it was that I wanted. The next step I followed was to find the Wiki page for the leak on Distributed Denial of Secrets. [2]

Once there, I was greeted with a message saying that those interested in the information would need to contact DDoSecrets directly to request access. The presumed purpose of this step was to ensure that the information was used responsibly. I respect the rationale and motivation for such a move, but I was still curious. I wanted to see if anyone at the same organization as me contributed to the group by foolishly using their work email address. I also wanted to see if the funding was in fact coming from foreign bodies.

Enter the next step in the process, something that should be in the toolbox of anyone who spends time online: the Internet Archive Wayback Machine. [3]

This invaluable mechanism of Internet history is able to take snapshots of web pages over time and present these snapshots fully rendered in a sensible interface. The Wayback Machine is extremely useful for so many different avenues of research and, delightfully, it throws a little bit of a wrench in the usual operation of the Internet, which usually functions as an actual memory hole. Within minutes, I was looking at a capture of the .wtf domain mentioned previously and was also in possession of the spreadsheet I was so compelled to find.
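For anyone who would rather script this lookup than click through the web interface, here is a minimal sketch using only the Python standard library. It assumes the Internet Archive's public availability endpoint at archive.org/wayback/available and the JSON shape that endpoint documents. The helper names (`build_query`, `closest_snapshot`, `lookup`) are my own inventions for illustration, and the sample response describes example.com rather than the leak itself.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the Internet Archive's public "availability" endpoint.
AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def build_query(url, timestamp=None):
    """Build the lookup URL; timestamp is an optional YYYYMMDD-style string."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp
    return AVAILABILITY_ENDPOINT + "?" + urllib.parse.urlencode(params)


def closest_snapshot(payload):
    """Return the URL of the closest archived capture, or None if there is none."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


def lookup(url, timestamp=None):
    """Ask the Wayback Machine for the capture closest to the given timestamp."""
    with urllib.request.urlopen(build_query(url, timestamp), timeout=30) as resp:
        return closest_snapshot(json.load(resp))


# Offline illustration: a response in the documented shape (not real leak data).
SAMPLE_RESPONSE = {
    "url": "example.com",
    "archived_snapshots": {
        "closest": {
            "status": "200",
            "available": True,
            "url": "http://web.archive.org/web/20130919044612/http://example.com/",
            "timestamp": "20130919044612",
        }
    },
}

print(closest_snapshot(SAMPLE_RESPONSE))
```

Calling `lookup()` with the domain you care about and an approximate date performs the live request. Whether a capture exists for any given page, and whether files linked from it were also archived, is something you only find out by asking.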

Armed with the data, the next step, of course, comes down to how to analyze it. Here, yet another tool came into play.

Simply put, that tool is the Jupyter Notebook, made available via Anaconda [4] or through various other means, such as Google Colab.

If you've spent time using Python for traditional programming projects or for scripting, this represents yet another paradigm where you can put your skills to use. In short, it is a web page that allows you to embed chunks of Python code and render their output by executing the code in an environment set up for that purpose. A search for "Python Pandas" will provide the broad strokes of how to interact with data using this suite of tools. Another site worth consulting is Kaggle. [5]

Kaggle provides interactive tutorials on data manipulation using the Notebook paradigm. As an alternative, another platform worth considering is R, or more specifically a cloud-hosted platform called RStudio. While just as powerful, it isn't my default, as I spend so much time with Python that I don't want to lose my fluency with that language.

Now, armed with the data and the proper tool to explore it with, the fun could begin. Some simple first things to explore: comparing donation amounts based on postal codes (think the Canadian equivalent of a ZIP Code) in my community, analyzing the full text of donor comments to see just how seditious they might be, and finding out what IP addresses associated with government agencies might be on the donor list.
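To make those first explorations concrete, here is a rough sketch of three of them using only the Python standard library, so it runs in any notebook cell. Everything in it is an assumption made for illustration: the column names (`name`, `email`, `postal_code`, `amount`, `ip`, `comment`) are hypothetical and not the layout of the real file, and the rows are invented, using reserved example domains and documentation-only IP ranges. In a notebook the same grouping is commonly done with a pandas `groupby`.

```python
import csv
import io
import ipaddress
from collections import defaultdict

# Invented rows standing in for the real file; column names are hypothetical.
SAMPLE_CSV = """name,email,postal_code,amount,ip,comment
A Donor,a@example.org,K1A 0B1,50.00,192.0.2.10,Honk
B Donor,b@example.com,K1A 0A6,25.00,198.51.100.7,
C Donor,c@example.org,M5V 2T6,100.00,203.0.113.5,Freedom
"""


def load_rows(handle):
    """Read every row of the CSV into a list of dictionaries keyed by column."""
    return list(csv.DictReader(handle))


def totals_by_fsa(rows):
    """Sum donations by the first three characters of the postal code."""
    totals = defaultdict(float)
    for row in rows:
        fsa = row["postal_code"].replace(" ", "").upper()[:3]
        totals[fsa] += float(row["amount"])
    return dict(totals)


def donors_with_domain(rows, domain):
    """Names of donors whose email address ends with the given domain."""
    suffix = "@" + domain.lower()
    return [row["name"] for row in rows if row["email"].lower().endswith(suffix)]


def donors_in_network(rows, cidr):
    """Names of donors whose IP address falls inside the given CIDR block."""
    network = ipaddress.ip_network(cidr)
    return [row["name"] for row in rows if ipaddress.ip_address(row["ip"]) in network]


rows = load_rows(io.StringIO(SAMPLE_CSV))
print(totals_by_fsa(rows))                       # {'K1A': 75.0, 'M5V': 100.0}
print(donors_with_domain(rows, "example.org"))   # ['A Donor', 'C Donor']
print(donors_in_network(rows, "192.0.2.0/24"))   # ['A Donor']
```

To point this at a real file, swap the `io.StringIO(SAMPLE_CSV)` handle for `open(path, newline="")` and adjust the column names to whatever the spreadsheet actually uses. Floats are fine for a first look at totals; anything you intend to publish deserves exact decimal arithmetic and a check for malformed rows.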

Bringing it back to the original thread, I hope this narrative compels us all to think of the full story of how a leak should play out. Not just the discovery and distribution, but also the analysis and evaluation. The hacker mindset is about discovery, curiosity, and ingenious thinking. Often we see this come to life by circumventing roadblocks imposed by DRM, or by helping bring information that whistle-blowers acquired at great risk to larger audiences and to the mainstream popular media. How often have you spent the time systematically working through a leak, or providing someone the tools and know-how to do something similar? I'm hopeful that the answer is something non-zero.

If this example isn't compelling enough, I encourage you to dig into this idea of operationalizing leaks by looking at the technical tools that the International Consortium of Investigative Journalists [6] has developed and uses to comb through terabytes of documents in order to find meaningful information that takes down despots and fights fascism.

These are marvelous tools built on top of large-scale data analysis platforms, all open-source, that allow you to use your command line knowledge and Python skills to sift through vast amounts of data. Better yet, what about helping a local journalist or community group utilize these tools to make meaningful discoveries? For people not accustomed to working with computer systems, never mind large caches of files, there is a steep learning curve just to understand how these tools can serve the greater good, let alone to bootstrap these systems and seed them with data.

Imagine handing over a refurbished, air-gapped machine running Ubuntu, pre-loaded with gigabytes of data and an intuitive web interface for exploring it, to a person who is just waiting to be given the tools that will allow them to crack open a very important investigation.

Platforms like SecureDrop and end-to-end encrypted communication channels do wonders for allowing those who are vulnerable to get in touch with trusted organizations so that they can provide information on nefarious dealings, but what about the last mile? What about enabling and performing the analysis in the first place? This is a step that I hope is part of the hacker ethos that will continue to be important as inevitably more and more leaks surface.

Links

1. www.cbc.ca/news/politics/convoy-protest-donations-data-1.6351292

2. ddosecrets.com/wiki/GiveSendGo

3. archive.org/web/

4. www.anaconda.com

5. kaggle.com

6. github.com/ICIJ