Hacking Motion Capture Software and Hardware

by Alan Sondheim

Hacking's most often associated with computers, social engineering, and so forth. There are many people working with glitch art as well as steganography, of course. For the past decade and a half I've been involved in hacking motion capture, in order to produce radically different Biovision Hierarchy Animation (BVH) files for animated avatars in virtual worlds, and in stand-alone videos made with Poser and other programs. In motion capture, sensors or reflectors are placed on the body of a performer; the output of the rigging is fed into a computer and transformed into a "behavioral model" paralleling the performer's movement. All well and good. But there are numerous ways to alter this and create amazing figures and movements almost beyond anything imaginable.

I began working in this direction years ago when I was given the opportunity for a residency in a virtual environments laboratory at West Virginia University in Morgantown. We found a disused motion capture setup that was quite old, even at the time; it worked through 18 sensors sending electromagnetic signals to an antenna that would locate them individually in space and time. The information was processed and output to an ASCII BVH file that could then form the basis for an animation.

We used this a couple of times and then began to experiment. I'm not a programmer, but I had an assistant who was, and I asked him to rewrite the interface itself. I thought that, just as there are various mathematically defined filters in, for example, Photoshop or GIMP, we might be able to create "behavioral filters" for mocap that would alter the output of the sensor mapping. My assistant located the mathematics that governed the input-output chain and I noticed that most of the trigonometric functions were sines and cosines. Given the maverick behaviors of functions such as tangents or hyperbolic functions, we began substituting these in the equations. We were also able, of course, to change any governing constants. The results were amazing - avatar behaviors that were like nothing we had ever seen before. We basically had an interface that appeared to govern the representation of behaviors in new ways.
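The substitution can be illustrated with a minimal sketch. This is not the WVU code, which is not reproduced here; the function `joint_position`, its parameters, and the single-segment limb model are all assumptions made for illustration. The usual sine and cosine place the end of a limb segment on a circle; swapping in a tangent or a hyperbolic function, or changing the `gain` constant, moves it somewhere a body could not reach.

```python
import math

def joint_position(length, angle_deg, sin_fn=math.sin, cos_fn=math.cos, gain=1.0):
    """Place the end of a limb segment (hypothetical one-joint model).

    sin_fn and cos_fn default to the ordinary functions. Passing
    others (math.tan, math.sinh, ...) acts as the "behavioral filter".
    gain is a governing constant that scales the incoming angle.
    """
    a = math.radians(angle_deg) * gain
    return (length * cos_fn(a), length * sin_fn(a))

# Ordinary mapping: the joint stays exactly one segment length away.
standard = joint_position(1.0, 30.0)

# Tangent substituted for sine: the limb stretches as the angle grows.
tan_filtered = joint_position(1.0, 30.0, sin_fn=math.tan)

# Hyperbolic functions substituted for both.
sinh_filtered = joint_position(1.0, 30.0, sin_fn=math.sinh, cos_fn=math.cosh)

for name, (x, y) in [("standard", standard),
                     ("tan", tan_filtered),
                     ("sinh/cosh", sinh_filtered)]:
    print(f"{name}: reach = {math.hypot(x, y):.3f}")
```

Near 90 degrees the tangent version shoots off toward infinity, which is one plausible source of the sudden, impossible movements described here.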

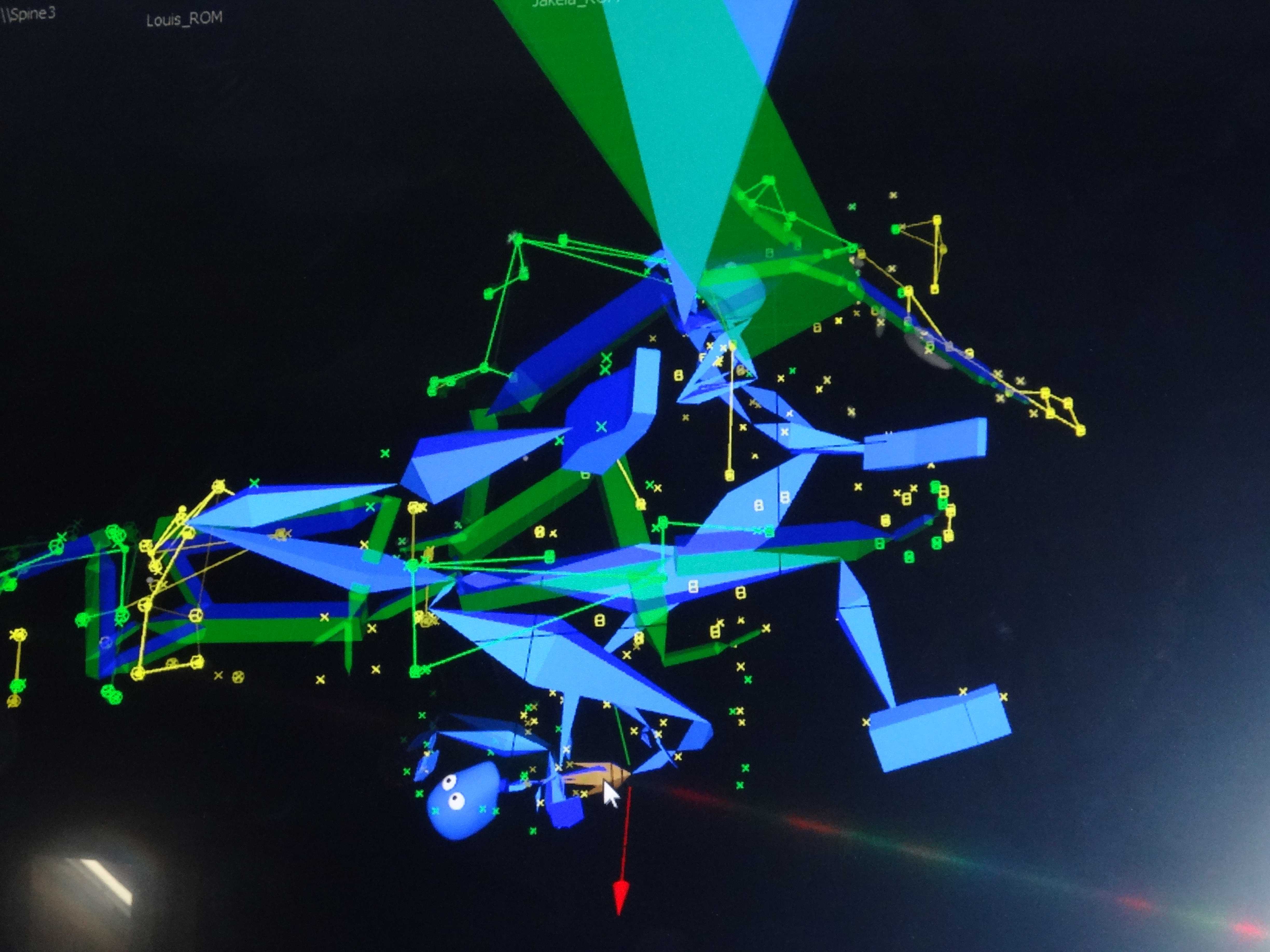

We didn't stop there; we also began working with the sensors themselves in order to create different body mappings altogether. This is something I've been exploring for years now at a number of different institutions. Random sensor placement is only of limited interest, but topological "smooth and/or broken" transformations work wonders. The remapping can be up-and-down, left-to-right, and so forth, but the sensors (on more complex mocap equipment with up to, say, 40 sensors) can be divided among several performers. If A wears the left-side sensors and B the right-side ones, and then A and B separate, this "tears" the representation of the body; either the software breaks down, or the body appears to expand. If A and B rotate in opposite directions, the body "wraps" in layers; the trick is to stop the software input before breakdown. At one point we used trapezes in the studio, the sensors divided among four performers, and the results were incredible; at another point, we had the four performers (student dancers) attempting to act coherently to create a single representation of a dancer that held together. The result was a dance created by the four performers themselves, in their constant adjustments to each other. (There were two outputs: video of the human dancers, and video of the single avatar dancer; these were combined.)
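In the studio the remapping was done physically, by moving sensors, but the same two operations can be sketched in software. Everything below is an illustrative assumption: the sensor names, the four-sensor pose, and the helper functions `remap`, `merge`, and `shift` are hypothetical and far simpler than a real rig.

```python
def remap(frame, mapping):
    """Give each body part the position reported by another sensor."""
    return {part: frame[sensor] for part, sensor in mapping.items()}

def merge(frame_a, frame_b, parts_from_a):
    """Build one body from two performers: A supplies the listed parts, B the rest."""
    return {part: frame_a[part] if part in parts_from_a else frame_b[part]
            for part in frame_a}

def shift(frame, dx):
    """Move a whole performer sideways by dx (metres, assumed)."""
    return {part: (x + dx, y, z) for part, (x, y, z) in frame.items()}

# One frame of a toy four-sensor pose: (x, y, z) per sensor.
pose = {
    "left_hand": (-0.7, 1.4, 0.0),
    "right_hand": (0.7, 1.4, 0.0),
    "left_foot": (-0.2, 0.0, 0.0),
    "right_foot": (0.2, 0.0, 0.0),
}

# Left-to-right remapping: every part reads from its opposite sensor.
mirror = {
    "left_hand": "right_hand", "right_hand": "left_hand",
    "left_foot": "right_foot", "right_foot": "left_foot",
}
mirrored = remap(pose, mirror)

# "Tearing": A wears the left side, B the right, and B walks 3 m away.
performer_a = pose
performer_b = shift(pose, 3.0)
torn = merge(performer_a, performer_b, {"left_hand", "left_foot"})

span = torn["right_hand"][0] - torn["left_hand"][0]
print(f"hand-to-hand span of the single avatar: {span:.1f} m")
```

The avatar's skeleton has fixed bone lengths, so a span like this cannot be satisfied; that mismatch is where the software either breaks down or shows the body expanding.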

The trick with all of these things is to produce a coherent output that can be used elsewhere. Some of the files were fed into Poser; some were fed directly into representations (say, of a bull); and the ones used in virtual worlds (OpenSim, MacGrid, and Second Life) produced amazing dances that seemed completely unworldly. There were two broad types of dances from the files: ones in which the body twisted itself into impossible shapes, and ones in which the location of the body in the environment was also impossible - instantaneous changes of position, rotations, and so forth.

In all of these things (I've worked at a number of places by now on invitations to use a university's mocap studio), social engineering is critical; a lot of technicians would keep trying to "correct" the mocap or say it wouldn't function properly or at all, and, in return, I would keep insisting that in my work there was no proper functioning; we'd experiment and see what would happen. All of these other places, by the way, had sealed software, of course; it was only at WVU that we had the amazing luck to hack the software itself.

These explorations have had many interesting results - new ways of thinking about modeling the body, new ways of creating and translating dance choreographies for performance, and a wide variety of behaviors and avatars for gaming, etc. The most sophisticated approach is hacking the software itself (instead of, or in addition to, remapping the sensors directly). Since there are numerous mocap kits available, this should be somewhat simple. Modules could be organized like SuperCollider programs, inserted and removed when necessary. The results are immediate and almost always satisfying.
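One way such modules might be organized is as a chain of small named filters, each taking one frame of channel values and returning a new one. This is only a sketch under stated assumptions: the class `FilterChain`, the filters `gain` and `wrap_degrees`, and the three-value frame are hypothetical, and no particular mocap kit's API is implied.

```python
class FilterChain:
    """An ordered list of named filters that can be inserted and removed."""

    def __init__(self):
        self._modules = []  # list of (name, function) pairs

    def insert(self, name, fn, index=None):
        """Add a filter at the given position, or at the end by default."""
        pos = len(self._modules) if index is None else index
        self._modules.insert(pos, (name, fn))

    def remove(self, name):
        """Drop every filter registered under this name."""
        self._modules = [(n, f) for n, f in self._modules if n != name]

    def names(self):
        return [n for n, _ in self._modules]

    def apply(self, channels):
        """Run one frame of channel values through every filter in order."""
        for _, fn in self._modules:
            channels = fn(channels)
        return channels

def gain(factor):
    """Filter factory: multiply every channel by a constant."""
    return lambda channels: [factor * v for v in channels]

def wrap_degrees(channels):
    """Fold angles back into the range [-180, 180)."""
    return [((v + 180.0) % 360.0) - 180.0 for v in channels]

chain = FilterChain()
chain.insert("gain", gain(3.0))
chain.insert("wrap", wrap_degrees)

frame = [10.0, 90.0, -120.0]      # three rotation channels, in degrees
with_wrap = chain.apply(frame)

chain.remove("wrap")              # pull a module out again
without_wrap = chain.apply(frame)
print(chain.names(), with_wrap, without_wrap)
```

Because each filter only sees a list of numbers, the same chain could in principle be run over the rows of a BVH file's motion data after capture as well as over live sensor input.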

Examples